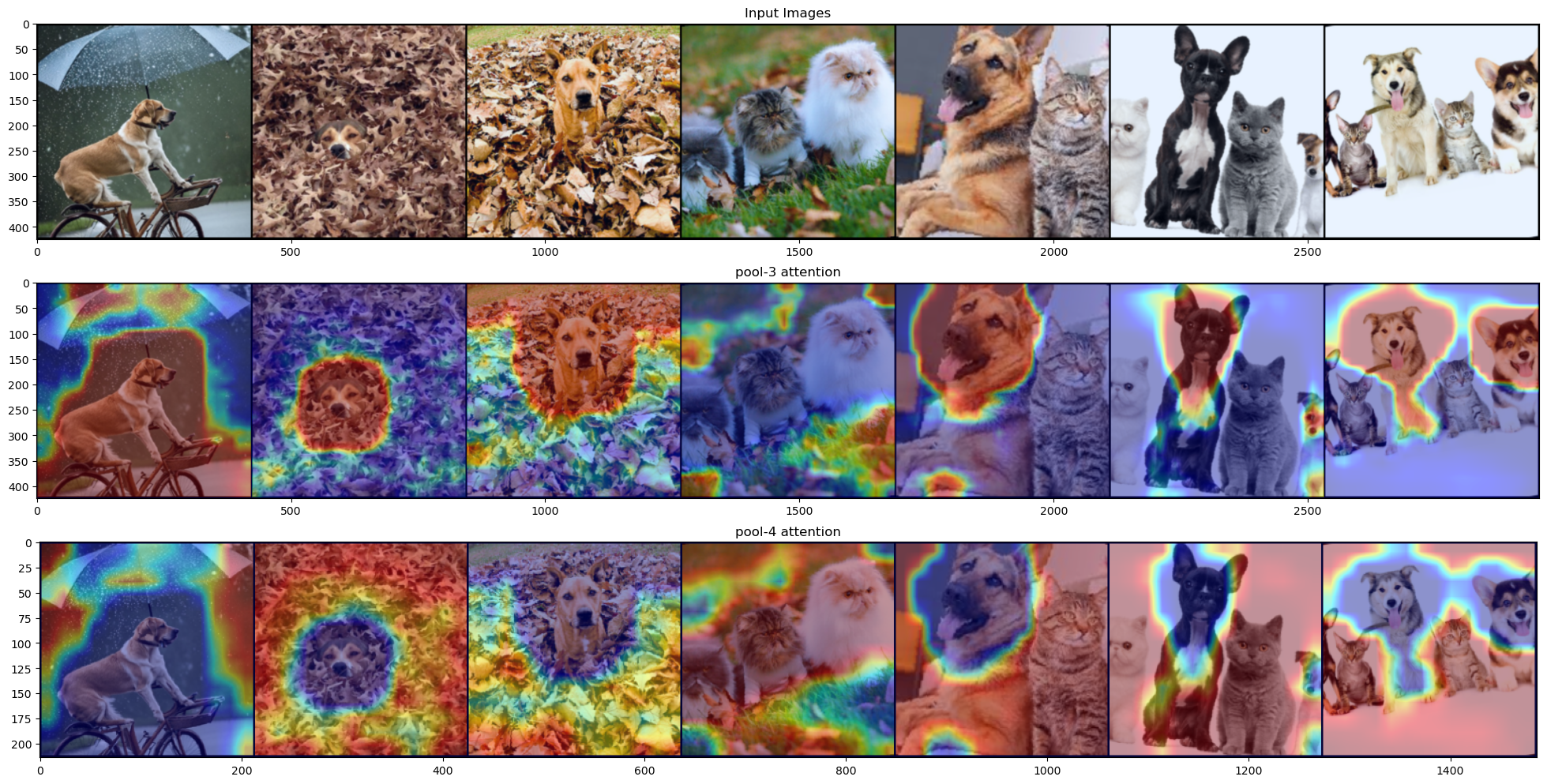

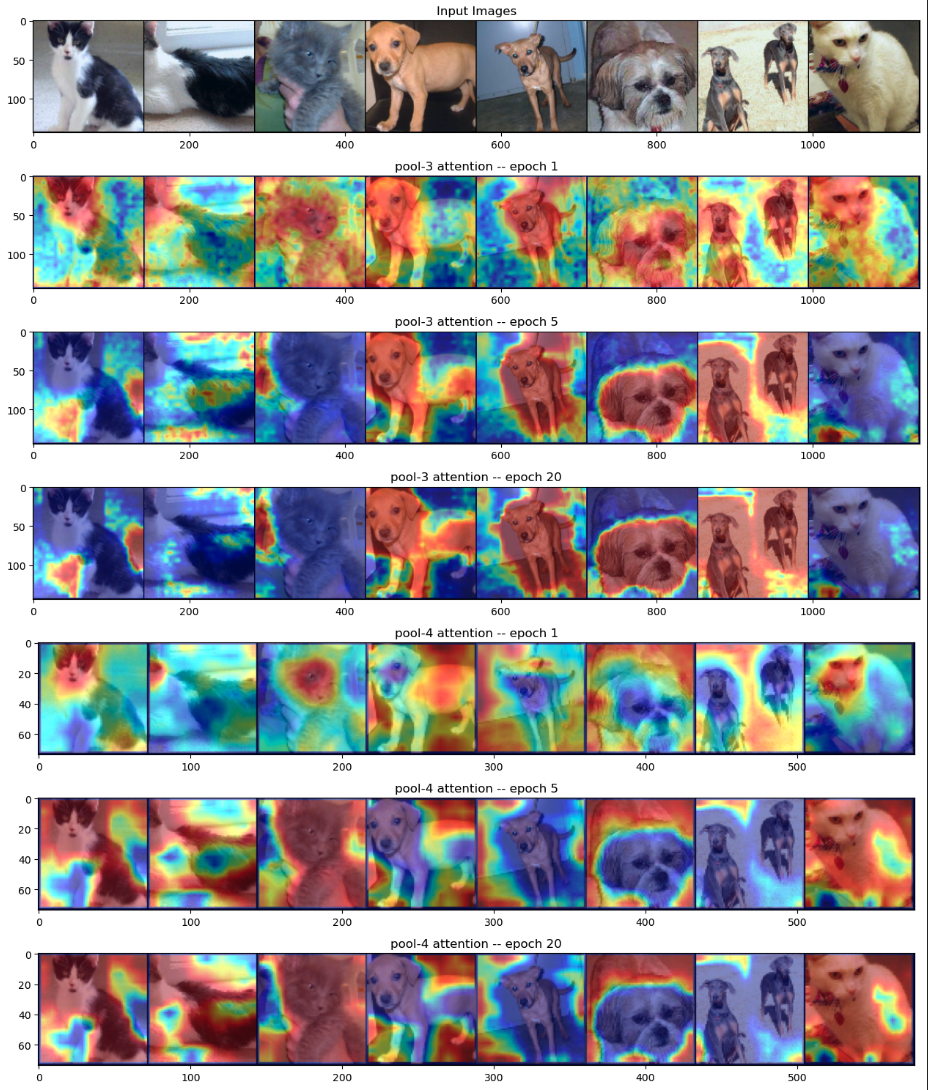

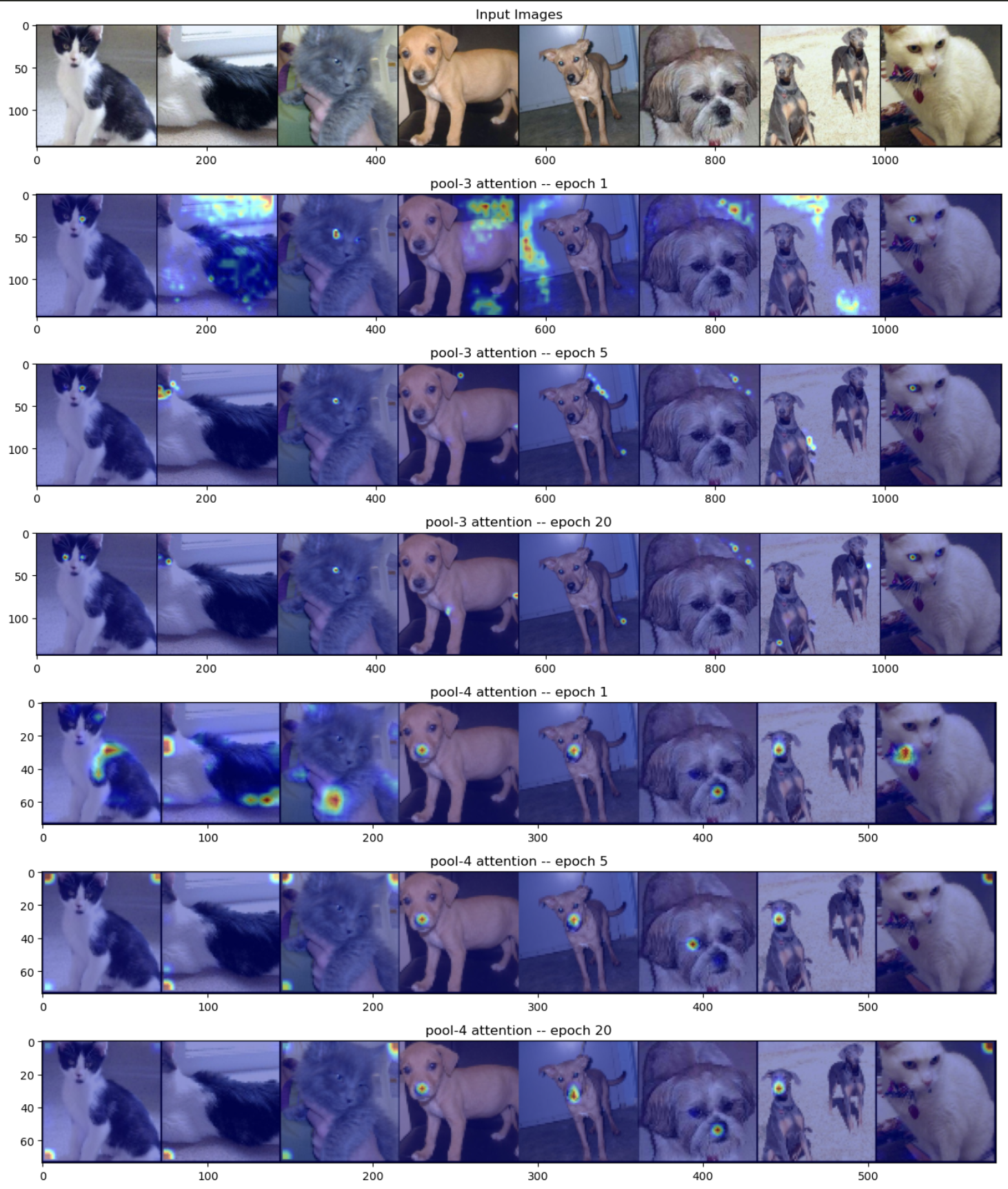

This project builds an image classification system that adds a self-attention mechanism, the core building block of transformer architectures, to a convolutional network for computer vision. Using a dataset of 10,000 cat and dog images from Kaggle, it augments a VGG16 model with self-attention to improve accuracy. The attention layer lets the model focus on distinctive image regions, such as a dog's snout or a cat's eye, which improves both model interpretability and classification performance.
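To make the idea concrete, here is a minimal PyTorch sketch of self-attention applied to a VGG16-style feature map. It is an illustration under stated assumptions, not the repository's actual code: the class names `SelfAttention2d` and `AttentionHead`, the single-head design, the `attn_dim` size, the residual connection, and the mean pooling before the dense layer are all hypothetical choices.

```python
import torch
import torch.nn as nn


class SelfAttention2d(nn.Module):
    """Single-head scaled dot-product self-attention over spatial positions.

    Hypothetical module: each of the H*W positions in the feature map is
    treated as one token with `channels` features.
    """

    def __init__(self, channels: int, attn_dim: int = 64):
        super().__init__()
        self.query = nn.Linear(channels, attn_dim)
        self.key = nn.Linear(channels, attn_dim)
        self.value = nn.Linear(channels, channels)
        self.scale = attn_dim ** -0.5

    def forward(self, x: torch.Tensor):
        # x: (B, C, H, W) -> tokens: (B, H*W, C)
        tokens = x.flatten(2).transpose(1, 2)
        q, k, v = self.query(tokens), self.key(tokens), self.value(tokens)
        # Attention weights: each row sums to 1 over all spatial positions.
        weights = torch.softmax((q @ k.transpose(1, 2)) * self.scale, dim=-1)
        # Residual connection (an assumption) keeps the original features.
        attended = tokens + weights @ v
        return attended, weights


class AttentionHead(nn.Module):
    """Self-attention followed by a dense layer for cat/dog classification."""

    def __init__(self, channels: int = 512, num_classes: int = 2):
        super().__init__()
        self.attention = SelfAttention2d(channels)
        self.classifier = nn.Linear(channels, num_classes)

    def forward(self, feature_map: torch.Tensor):
        attended, weights = self.attention(feature_map)
        pooled = attended.mean(dim=1)  # average over spatial positions
        return self.classifier(pooled), weights


if __name__ == "__main__":
    # Random stand-in for a batch of VGG16 convolutional feature maps.
    features = torch.randn(2, 512, 7, 7)
    logits, weights = AttentionHead()(features)
    print(logits.shape, weights.shape)
```

For a 224x224 input, the convolutional part of VGG16 (`torchvision.models.vgg16(...).features`) produces a 512x7x7 feature map, so this head could sit directly on top of it. The returned attention weights (one 49x49 matrix per image) can be reshaped to 7x7 maps to inspect which regions each position attends to.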

- Enhanced Discernment of Distinct Features Through the Integration of a Self-Attention Mechanism

- Utilization of Kaggle's Cat and Dog Image Dataset

- PyTorch Implementation for Model Training

- Field: Computer Vision, Classification using VGG-16, Dense Layer with Self-Attention

- Libraries: Torch, Torchvision, Grad-CAM

- Stack: Python

- Source: github.com/krheng14/CNN-with-Self-Attention